```python
import tensorflow as tf

print(tf.__version__)
```

```
2.15.0
```

Arun Koundinya Parasa

March 21, 2024

This article primarily discusses the importance of broadcasting and how easily it can be implemented using tensors.

| Data Type | Python Type | Description |
|---|---|---|
| DT_FLOAT | tf.float32 | 32-bit floating point. |
| DT_DOUBLE | tf.float64 | 64-bit floating point. |
| DT_INT8 | tf.int8 | 8-bit signed integer. |
| DT_INT16 | tf.int16 | 16-bit signed integer. |
| DT_INT32 | tf.int32 | 32-bit signed integer. |
| DT_INT64 | tf.int64 | 64-bit signed integer. |
| DT_UINT8 | tf.uint8 | 8-bit unsigned integer. |
| DT_STRING | tf.string | Variable-length byte arrays. Each element of a tensor is a byte array. |
| DT_BOOL | tf.bool | Boolean. |
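To connect the table to code, here is a small sketch of how these dtypes show up in practice. The tensor names are illustrative only. It sets dtypes explicitly, shows the dtypes TensorFlow infers from plain Python values, and converts between them with `tf.cast`.

```python
import tensorflow as tf

# Explicit dtypes taken from the table above.
a = tf.constant([1, 2, 3], dtype=tf.int8)
b = tf.constant([1.5, 2.5], dtype=tf.float64)

# Without an explicit dtype, TensorFlow infers one from the Python values.
i = tf.constant([1, 2])                # Python ints   -> tf.int32
f = tf.constant([1.0, 2.0])            # Python floats -> tf.float32
s = tf.constant(["hello", "tensor"])   # Python str    -> tf.string
flag = tf.constant([True, False])      # Python bool   -> tf.bool

for name, t in [("a", a), ("b", b), ("i", i), ("f", f), ("s", s), ("flag", flag)]:
    print(name, t.dtype)

# tf.cast converts between numeric dtypes; float -> int drops the fractional part.
c = tf.cast(b, tf.int32)
print(c)
```

The inferred defaults (`int32` for integers, `float32` for floats) are worth remembering, because arithmetic ops expect both operands to share a dtype.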

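```python
# x_new and y_new are not defined in this excerpt. These definitions are an
# assumption, chosen to match the printed results below
# (x_new + y_new == 3 and x_new - y_new == -1 everywhere, dtype int8).
x_new = tf.ones((2, 3), dtype=tf.int8)
y_new = tf.constant([[2, 2, 2], [2, 2, 2]], dtype=tf.int8)

print("Addition:\n", tf.add(x_new, y_new))
print()
print("Subtraction:\n", tf.subtract(x_new, y_new))
print()
```

```
Addition:
tf.Tensor(
[[3 3 3]
 [3 3 3]], shape=(2, 3), dtype=int8)

Subtraction:
tf.Tensor(
[[-1 -1 -1]
 [-1 -1 -1]], shape=(2, 3), dtype=int8)
```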
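Both results above keep the `int8` dtype of their inputs. By default, TensorFlow's arithmetic ops expect both operands to share a dtype and do not promote mixed dtypes for you. The sketch below uses hypothetical tensors `x` and `y` to show the failure and the usual fix with `tf.cast`. The exact exception class can depend on the code path, so the sketch catches the likely candidates.

```python
import tensorflow as tf

x = tf.ones((2, 3), dtype=tf.int8)  # int8, like the inputs above
y = tf.fill((2, 3), 0.5)            # Python float -> float32

raised = False
try:
    tf.add(x, y)  # int8 + float32: no implicit promotion by default
except (tf.errors.InvalidArgumentError, TypeError, ValueError) as err:
    raised = True
    print("Mixed dtypes rejected:", type(err).__name__)

# Cast one operand so both share a dtype, then add.
z = tf.add(tf.cast(x, tf.float32), y)
print(z)
```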

print("Multiplication:\n", tf.multiply(tf.random.normal(shape=(2,3)),tf.random.normal(shape=(2,3))))Multiplication:

tf.Tensor(

[[-0.5718114 -0.18780218 -2.0768495 ]

[ 0.29304612 0.08317164 -1.3320862 ]], shape=(2, 3), dtype=float32)print("Matrix Multiplication:\n", tf.matmul(tf.random.normal(shape=(2,3)),tf.random.normal(shape=(3,2))))

print(" ")

print(" ")

print("Matrix Multiplication:\n", tf.matmul(tf.random.normal(shape=(2,3)),tf.random.normal(shape=(3,1))))Matrix Multiplication:

tf.Tensor(

[[-1.6848389 -0.44041786]

[-0.9548799 -0.22109865]], shape=(2, 2), dtype=float32)

Matrix Multiplication:

tf.Tensor(

[[ 0.37789747]

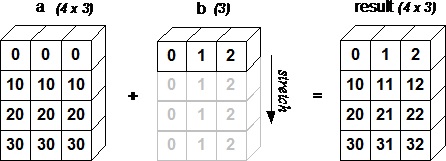

[-0.12570143]], shape=(2, 1), dtype=float32)Broadcasting is useful when the dimensions of matrices are different from each other. This is highly useful to add and subtract the matrices of different lengths.

Here’s a brief summary of how broadcasting works for addition and subtraction:

Addition: If the shapes of the two tensors differ, TensorFlow compares them dimension by dimension, starting from the trailing (rightmost) dimension. Two dimensions are compatible when they are equal or when one of them is 1. If one tensor has fewer dimensions, its missing leading dimensions are treated as 1. When every pair is compatible, each size-1 dimension is stretched to match the other tensor. For example, you can add a scalar to a matrix, and the scalar value will be added to every element of the matrix.

Subtraction: Broadcasting follows the same rules, so you can subtract a scalar or a vector from a matrix, or subtract tensors of different but compatible shapes.
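The compatibility rule can be written as a few lines of plain Python. In the sketch below, `broadcast_shape` is a hypothetical helper and not a TensorFlow API. It left-pads the shorter shape with 1s, checks each pair of dimensions, and is cross-checked against `tf.broadcast_static_shape`. The sketch then applies the rule to a scalar with a matrix and to random `(2, 3)` and `(2, 1)` tensors like the `x1` and `x2` printed below. Because the inputs are random, the values will not match those outputs.

```python
import tensorflow as tf


def broadcast_shape(shape_a, shape_b):
    """Hypothetical helper (not a TensorFlow API): broadcast result shape or ValueError."""
    a, b = list(shape_a), list(shape_b)
    # Left-pad the shorter shape with 1s so the trailing dimensions line up.
    rank = max(len(a), len(b))
    a = [1] * (rank - len(a)) + a
    b = [1] * (rank - len(b)) + b
    result = []
    for da, db in zip(a, b):
        if da == db or db == 1:
            result.append(da)   # equal sizes, or b's size-1 dimension is stretched
        elif da == 1:
            result.append(db)   # a's size-1 dimension is stretched
        else:
            raise ValueError(f"incompatible dimensions {da} and {db}")
    return tuple(result)


print(broadcast_shape((2, 3), (2, 1)))   # (2, 3)
print(broadcast_shape((2, 3), ()))       # scalar with a matrix: (2, 3)
try:
    broadcast_shape((2, 3), (3, 2))
except ValueError as err:
    print("Not broadcastable:", err)

# TensorFlow's own static shape rule should agree with the helper.
static = tf.broadcast_static_shape(tf.TensorShape([2, 3]), tf.TensorShape([2, 1]))
print(static)

# Scalar + matrix: the scalar is added to every element.
m = tf.constant([[1.0, 2.0, 3.0], [4.0, 5.0, 6.0]])
print(m + 10.0)

# (2, 3) with (2, 1): x2's single column is reused for all three columns,
# which gives the same result as broadcasting x2 explicitly with tf.broadcast_to.
x1 = tf.random.normal(shape=(2, 3))
x2 = tf.random.normal(shape=(2, 1))
implicit = tf.add(x1, x2)
explicit = tf.add(x1, tf.broadcast_to(x2, [2, 3]))
print(implicit)
print(tf.subtract(x1, x2))
```

The helper only reports the result shape. TensorFlow performs the stretching inside the op, so there is no need to call `tf.broadcast_to` before adding or subtracting.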

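```
x1:
tf.Tensor(
[[ 0.39297998 -0.12811422 -0.60474324]
 [ 0.30773026  1.4076523  -0.57274765]], shape=(2, 3), dtype=float32)

x2:
tf.Tensor(
[[0.10113019]
 [1.0277312 ]], shape=(2, 1), dtype=float32)
```

Broadcasting x2 to shape (2, 3) repeats its single column three times:

```
<tf.Tensor: shape=(2, 3), dtype=float32, numpy=
array([[0.10113019, 0.10113019, 0.10113019],
       [1.0277312 , 1.0277312 , 1.0277312 ]], dtype=float32)>
```

Adding x1 and x2 broadcasts x2 in the same way, so each row's value from x2 is added to every element in that row of x1 (for example, 0.39297998 + 0.10113019 = 0.49411017):

```
<tf.Tensor: shape=(2, 3), dtype=float32, numpy=
array([[ 0.49411017, -0.02698404, -0.50361305],
       [ 1.3354614 ,  2.4353833 ,  0.45498353]], dtype=float32)>
```

Subtracting x2 from x1 follows the same pattern (for example, 0.39297998 - 0.10113019 ≈ 0.2918498):

```
<tf.Tensor: shape=(2, 3), dtype=float32, numpy=
array([[ 0.2918498 , -0.22924441, -0.7058734 ],
       [-0.7200009 ,  0.37992108, -1.6004789 ]], dtype=float32)>
```

The examples above broadcast between tensors of the same rank: both x1 and x2 are two-dimensional.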

Below, we will see broadcasting at work across tensors with different numbers of dimensions.

```
<tf.Tensor: shape=(2, 2, 2), dtype=float32, numpy=
array([[[-1.0228443 ,  0.34588122],
        [ 2.188323  , -0.7523911 ]],

       [[-0.56017506, -1.5636952 ],
        [ 1.572252  ,  1.6189265 ]]], dtype=float32)>
```
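The inputs behind the (2, 2, 2) result above are not shown. The sketch below picks illustrative values (all names are hypothetical) to show how broadcasting handles tensors of different ranks. It combines a rank-3 tensor with a rank-1 tensor and a rank-2 tensor. Each lower-rank operand is treated as if it had leading dimensions of size 1, and those dimensions are then stretched.

```python
import tensorflow as tf

cube = tf.zeros((2, 2, 2))            # rank 3, shape (2, 2, 2)
row = tf.constant([1.0, 2.0])         # rank 1, shape (2,)   -> treated as (1, 1, 2)
col = tf.constant([[10.0], [20.0]])   # rank 2, shape (2, 1) -> treated as (1, 2, 1)

r1 = cube + row   # row is added along the last axis of each 2x2 slice
r2 = cube + col   # col's values are repeated along the last axis
r3 = row + col    # (2,) with (2, 1): both operands stretch, giving (2, 2)

print(r1.shape, r2.shape, r3.shape)
print(r3)
```

Because `cube` is all zeros, `r1` and `r2` make the stretched copies of `row` and `col` directly visible.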